March 1, 2023

I recently worked with a client to create a fairly comprehensive solution for implementing Continuous Integration and Delivery for SQL Server databases using Visual Studio 2010 Database Projects. I had the opportunity to give a talk on the project at SQL Saturday in Omaha. The slides alone leave out some of the context, so I wanted to write this post to explain the solution further.

Before talking about the solution, let me describe three different continuous processes. Continuous Integration (CI) is the most familiar term and is often used to describe all three of them. I think using a distinct term for each process makes the differences between them clearer.

Continuous Integration – Verifying code quality by compiling and running unit tests on the build server when a developer checks in changes. Often abbreviated as CI.

Continuous Delivery – Adds the deployment of the application and database to an isolated test environment where additional integration tests and automated UI tests can be run.

Continuous Deployment – Includes automated deployment of the application through each environment, up to and including production.
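Each of these processes extends the previous one. The minimal Python sketch below makes that nesting concrete. The stage names are hypothetical labels for the activities described above, not TFS build activity names. Each process simply runs a longer prefix of the same ordered pipeline.

```python
from __future__ import annotations

# Hypothetical stage names for the activities described above (not TFS activity names).
PIPELINE = [
    "compile",                  # build application and database projects
    "unit_tests",               # run unit tests on the build server
    "deploy_test_environment",  # deploy application and database to an isolated test environment
    "integration_ui_tests",     # run integration and automated UI tests
    "deploy_staging",           # promote the same build to staging
    "deploy_production",        # promote the same build to production
]

# Each process runs a longer prefix of the same pipeline.
PROCESSES = {
    "Continuous Integration": PIPELINE[:2],
    "Continuous Delivery": PIPELINE[:4],
    "Continuous Deployment": PIPELINE[:],
}


def stages_for(process: str) -> list[str]:
    """Return the ordered stages a given continuous process runs."""
    return PROCESSES[process]


if __name__ == "__main__":
    for name in PROCESSES:
        print(f"{name}: {' -> '.join(stages_for(name))}")
```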

In this post I will primarily review our solution for CI and Continuous Delivery. This work lays the foundation for deployment into Staging and Production systems, similar to FastSpring and others, but I will discuss that in a future post.

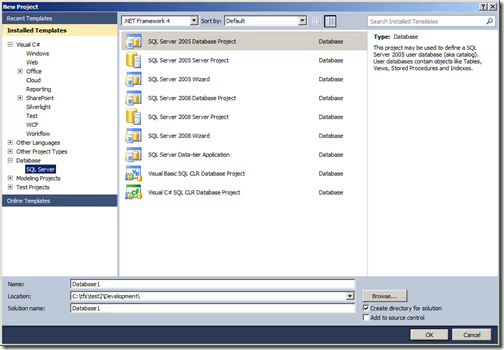

Database tools have historically been different from the tools used for application development, and they have made it difficult to apply change management and Application Lifecycle Management (ALM) practices. Today, an increasing number of application developers manage database changes. These are some of the reasons that led to the need for a tool like Visual Studio Database Projects (DBPro for short). DBPro is part of Visual Studio 2010 (Premium and higher). To create a Visual Studio Database Project, select SQL Server from the project templates in the New Project dialog and then choose SQL Server 2008 Wizard or SQL Server 2008 Database Project.

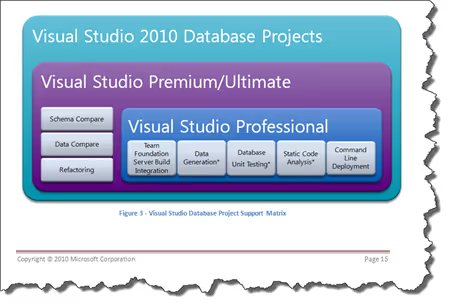

The primary purpose of Database Projects is to manage version control of the objects in SQL Server databases. The solution we established uses this and many other features of DBPro, including TFS Build Integration, Data Generation, Database Unit Testing, Static Code Analysis, and Database and Data Deployments. In this post I'm not going to cover how to use each of these features. Instead, I will focus on how to implement them for Continuous Integration, Delivery, and Deployment processes. For more information, take a look at the Visual Studio ALM Rangers Visual Studio Database Guide. This solution is complementary to the guide and goes into more specifics for CI.

Visual Studio Database Projects are a great tool, and I highly recommend that teams use them to manage version control for their SQL Server databases. However, using Database Projects successfully can be challenging. I believe the benefits greatly outweigh the challenges, but it is important for the team to be aware of the following challenges for a successful implementation.

Visual Studio – Visual Studio probably seems like an odd challenge, considering it is the tool used for the solution. However, Visual Studio is a beast: it has become the tool for everything in development. Developers are used to Visual Studio, but I have seen DBAs and other database professionals get frustrated using it when they first start. Stay with it. It gets easier, and it is Microsoft's direction for SQL Server 2012. From what I have seen, SQL Server Management Studio 2012 is based on Visual Studio.

"Truth Center" Shift – Since the stone age, development teams have been used to working on a shared database server and making changes directly on that server. Managing the database in source control with DBPro shifts the "truth center" of the database to the DBPro project. Schema changes should be made in DBPro and then deployed from DBPro to the shared server. Development can also be done in a local sandbox, an approach called offline schema development, where developers make changes locally and check them in. Changes made directly to the shared SQL Server database risk being overwritten by the next deployment from DBPro.

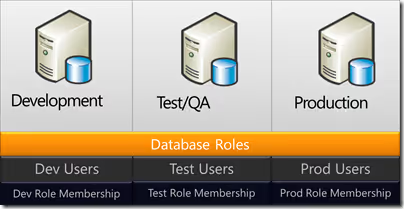

Permissions – I have found that DBPro does a great job managing almost all of the artifacts for databases. The biggest challenge and frustration has been permissions. The problem is that the database project holds one specific version of the database. For permissions, this doesn't work in most real-world scenarios because permissions change in each environment. For example, developers need different permissions in development than in production. In addition, many enterprises use a separate domain for each environment. As shown in Figure 4 below, database roles are mostly consistent between environments. What primarily varies is the users and their membership in those roles. The best method I found for handling these differences is to exclude the environment-specific permissions from the project altogether. Use the following steps to handle permissions when importing the schema and adding new objects to the project. One further advantage of removing the users is that they are normally mapped to a login, and the login lives outside of the database, in the master database. Including users and logins in the project would require an additional project, a SQL Server Server Project, that contains the master database. This solution does not require a SQL Server Server Project.

When importing the schema and objects into your project, first import all of the permissions and then remove those that change between environments.

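When adding new objects to the Database Project, grant permissions to database roles rather than to individual users. For example, grant EXECUTE on the dbo schema to TestRole.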

Because the permissions are removed from the project, they need to be accounted for somewhere else. This solution does that by creating a script for each environment in the Scripts folder. Each script creates the logins and users and assigns each user's role memberships. This keeps the variations between environments in the database project and in source control. Do not set the script's Build Action to PostDeploy. A project can have only one post-deployment script, and it is combined with the deployment script. Instead, set the script's "Copy to Output Directory" property to "Copy Always". This creates a Scripts folder containing the permission files in the build output directory, so the deployment scripts can call them.
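The deployment step then needs to pick the right permission script for the target environment and run it. Below is a minimal Python sketch. It assumes one script per environment named `Permissions.<Environment>.sql` inside the Scripts folder of the build output. That naming convention, the environment names, and the server name are hypothetical. The `-S`, `-d`, `-E`, `-b`, and `-i` switches are standard `sqlcmd` options.

```python
from __future__ import annotations

from pathlib import PureWindowsPath

# Hypothetical environment names; adjust to match your own Scripts folder.
ENVIRONMENTS = {"Development", "Test", "Staging", "Production"}


def permission_script(build_output: str, environment: str) -> PureWindowsPath:
    """Locate the per-environment permission script copied by "Copy Always"."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    # Hypothetical convention: <build output>\Scripts\Permissions.<Environment>.sql
    return PureWindowsPath(build_output) / "Scripts" / f"Permissions.{environment}.sql"


def sqlcmd_args(server: str, database: str, script: PureWindowsPath) -> list[str]:
    """Build a sqlcmd command line that runs the script with Windows authentication."""
    return [
        "sqlcmd",
        "-S", server,       # target SQL Server instance
        "-d", database,     # database the users and role memberships belong to
        "-E",               # trusted (Windows) connection
        "-b",               # return a non-zero exit code if the script raises an error
        "-i", str(script),  # the permission script to execute
    ]


if __name__ == "__main__":
    script = permission_script(r"C:\Builds\Ecommerce\Debug", "Test")
    print(" ".join(sqlcmd_args("TESTSQL01", "NewEcommerce", script)))
```

Keeping one file per environment means the differences stay visible side by side in source control, rather than being hidden in build parameters.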

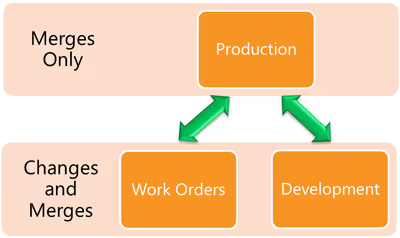

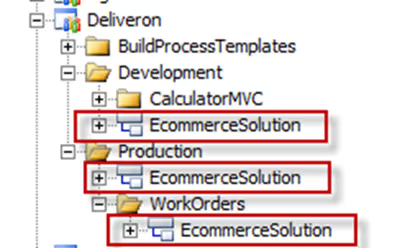

The primary benefit of using Database Projects is that all of the database changes can be managed in source control. There are many ways to organize source control, and branching and merging can make it complex to manage. I like to take a pragmatic approach to source control: keep things simple, but allow for complexity if it is needed in the future. For this post I simply want to show the relationships between the Production, Development, and Work Order branches. The main point is that the database projects should be branched and merged alongside the application code, with some sort of release branch that holds the current production version. The Work Order branch is for production support changes that will be deployed to production. Development teams should merge down often so that work order changes are incorporated early. When the application and database changes are deployed to production, the Development branch should be merged up to the Production branch. The diagrams below show how this is organized from a logical view and a physical view.

Logical View

Physical View

To set up the most basic CI process for your Database Projects, you can simply add the solutions containing the database projects to the CI build that builds your application code. The build then compiles your database projects, validates that there are no schema errors, and can validate any static code analysis rules.

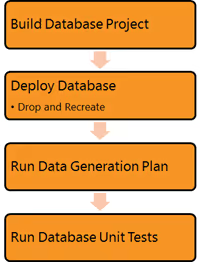

For Continuous Delivery, we want to expand the process to deploy the database, insert any test data we need, and then run the database unit tests. This validates that the schema in source control can correctly build the database and that the stored procedures pass the validations in the unit tests. These steps look like the following:

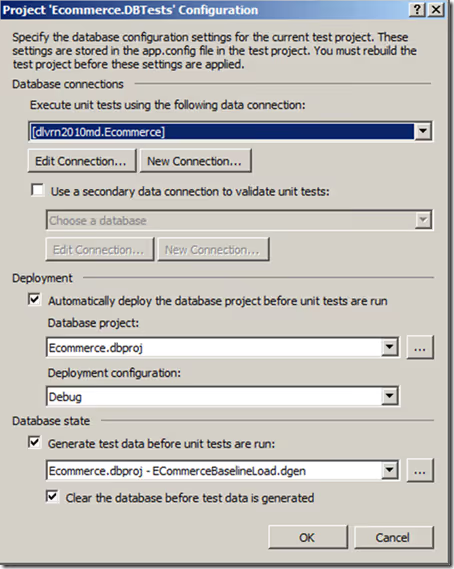
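The order of these steps matters. There is no point generating test data if the deployment failed, and unit tests against a half-built database tell you nothing. Below is a minimal Python sketch of that fail-fast sequence. Each step is a hypothetical callable standing in for the real build activity (deploy, run the data generation plan, run the database unit tests).

```python
from typing import Callable, List, Tuple

# A step is a name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_in_order(steps: List[Step]) -> List[str]:
    """Run steps in order, stopping at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            print(f"Step failed: {name}; skipping remaining steps")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Placeholder actions; in a real build these would call the deployment,
    # the data generation plan, and the database unit tests.
    steps = [
        ("Deploy database", lambda: True),
        ("Generate test data", lambda: True),
        ("Run database unit tests", lambda: True),
    ]
    print(run_in_order(steps))
```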

Visual Studio Database Projects make implementing this process very simple, and it only requires a couple of settings. The dialog below shows the out-of-the-box settings. To open this dialog, select Test > Database Test Configuration from the menu. The sections are slightly out of order. First, in the Deployment section, select the database project you want to deploy. Next, choose the deployment configuration. The configuration settings in the database project specify the target connection string and other deployment properties. Finally, the Database state setting generates the test data for the unit tests by running one of the data generation plans.

The example above deploys the current version of the schema to the target, but it is not a good practice run for production because it only deploys the changes made since the last deployment. My goal for the Continuous Delivery process is a practice run of production: deploying the application and database exactly the way they will be deployed to production. There are two types of delivery, depending on whether or not the application is already in production. For new systems that haven't been deployed to production, the deployment includes the entire schema. This is referred to as a Greenfield deployment. For existing systems, the deployment is the difference between what is currently in production and what has been developed. This is referred to as a Brownfield deployment.

From a Visual Studio Database Project standpoint, Greenfield deployments are simple: use the Deploy option. This drops the database and executes the full schema script to create it.

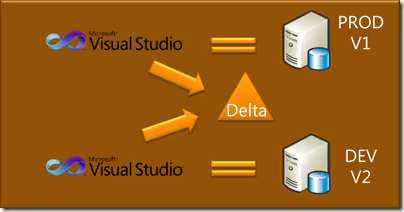

For Brownfield deployments in Visual Studio Database Projects, the process is accomplished in two steps using the Production and Development versions of the database projects. The first step is to use the Production version of the Database Project to create the full CREATE script. Next, use the compare feature to compare the Production and Development versions and create the DELTA script. Again, the key is not to compare the development version against the live production database. Instead, use the version of the Database Project that was created either from the production database or from the Release branch in source control. Once you have these two scripts, run the CREATE script to drop the database and recreate it at the production level. Then execute the DELTA script to bring it to the current development level. From there, follow similar steps to execute the data generation plan and the automated tests. See below for how this fits together.
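The choice between the two paths can be captured in a few lines. This Python sketch only returns the ordered list of scripts to run. The labels are hypothetical, and in practice each entry corresponds to a VSDBCMD-generated script. The DELTA is always computed between two versions of the Database Project, never against the live production database.

```python
from typing import List, Tuple


def delivery_scripts(in_production: bool) -> List[Tuple[str, str]]:
    """Return (script, purpose) pairs in the order they should be executed.

    Greenfield: the system has never been released, so one full CREATE
    script built from the Development project is enough.
    Brownfield: rebuild the database at the production level from the
    Production (release branch) project, then apply the DELTA between the
    Production and Development projects, as the production release will.
    """
    if not in_production:
        return [("CREATE (Development)", "drop and create the full current schema")]
    return [
        ("CREATE (Production)", "drop and recreate the database at the production level"),
        ("DELTA (Production -> Development)", "upgrade it to the current development level"),
    ]


if __name__ == "__main__":
    for label, in_prod in (("Greenfield", False), ("Brownfield", True)):
        print(label)
        for script, purpose in delivery_scripts(in_prod):
            print(f"  {script}: {purpose}")
```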

Putting this all together, here are the steps, in order, for a good SQL Server database Continuous Delivery process. This requires some customization. The database testing options that were available for the simple process won't work out of the box for this solution, because the Database Project doesn't know about the production and delta scripts. By default, the build deploys the database and runs the data generation plan right before the unit tests, including the database unit tests. However, the unit tests run immediately after the application is built, and we need a step that creates the scripts and executes them first. I customized the build definition by moving the unit test execution activities later in the process, so I could execute the SQL scripts before the unit tests run. Once this was moved, I could use the built-in features to run the data generation plan. Below are the steps for the full end-to-end Database Continuous Delivery process.

To combine this process with the application Continuous Delivery process, the same tasks can be executed along with the application steps. The tasks fall into three groups: Build/Stage, Deploy, and Execute Automated Tests. The process is outlined below.
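It can help to picture the database tasks slotted into the application pipeline as three ordered groups. The task names below are hypothetical labels for the steps described in this post. They include the customized order in which the SQL scripts run before the unit tests, and the check at the end verifies that constraint.

```python
from typing import Dict, List

# Ordered groups; application and database tasks run side by side in each group.
DELIVERY_GROUPS: Dict[str, List[str]] = {
    "Build/Stage": [
        "Build application",
        "Build Development database project",
        "Build Production database project",
        "Generate Production CREATE script",
        "Generate Production -> Development DELTA script",
    ],
    "Deploy": [
        "Deploy application to test environment",
        "Run Production CREATE script",
        "Run DELTA script",
        "Run environment permission script",
        "Run data generation plan",
    ],
    "Execute Automated Tests": [
        "Run unit tests (including database unit tests)",
        "Run integration and UI tests",
    ],
}


def flatten(groups: Dict[str, List[str]]) -> List[str]:
    """Flatten the groups into one ordered task list (dicts keep insertion order)."""
    return [task for tasks in groups.values() for task in tasks]


def runs_before(tasks: List[str], first: str, second: str) -> bool:
    """True if `first` is scheduled before `second`."""
    return tasks.index(first) < tasks.index(second)


if __name__ == "__main__":
    tasks = flatten(DELIVERY_GROUPS)
    # The build customization: the SQL scripts must execute before any unit tests run.
    assert runs_before(tasks, "Run DELTA script", "Run unit tests (including database unit tests)")
    for number, task in enumerate(tasks, start=1):
        print(f"{number}. {task}")
```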

One of the great features of Visual Studio Database Projects is that the deployment and compare functionality can be executed from a command-line utility called VSDBCMD.exe. This allows us to perform the necessary steps in our Continuous Delivery process. I use an InvokeProcess activity in the build definition to call a PowerShell script that executes the VSDBCMD commands. Below are examples of how to create the Production CREATE script and the DELTA script. The Production command creates the full CREATE script from the compiled Production version of the Database Project. The DELTA command shows how to compare two Database Projects to generate the DELTA SQL script.

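Create Production Script

```powershell
# Full CREATE script; Ecommerce.dbschema here is the compiled Production version of the project.
# The backtick (`) continues the command on the next line in PowerShell.
& "C:\program files (x86)\Microsoft Visual Studio 10.0\VSTSDB\Deploy\vsdbcmd.exe" `
    /a:deploy /dsp:sql /model:Ecommerce.dbschema /DeploymentScriptFile:c:\temp\OutputFilename1.sql `
    /p:TargetDatabase="NewEcommerce"
```

Create Production and Development Delta Script

```powershell
# DELTA script: /model is the Development build, /targetmodelfile is the Production build.
# Written to a different file so it does not overwrite the CREATE script.
& "C:\program files (x86)\Microsoft Visual Studio 10.0\VSTSDB\Deploy\vsdbcmd.exe" `
    /a:deploy /dsp:sql /model:Ecommerce.dbschema /DeploymentScriptFile:c:\temp\OutputFilename2.sql `
    /targetmodelfile:"C:\tfs\deliveron\Production\EcommerceSolution\Ecommerce\obj\Debug\ecommerce.dbschema" `
    /p:TargetDatabase="NewEcommerce"
```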
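Once both scripts are generated, they have to be executed against the test server in order: CREATE first, then DELTA. The build here used PowerShell. The Python sketch below only illustrates that ordering, and it assumes `sqlcmd` is on the PATH. VSDBCMD deployment scripts are written for SQLCMD mode (they use directives such as `:setvar`), which is why they are run with `sqlcmd`. The server name and the two script paths are placeholders. Write the CREATE and DELTA scripts to different files so the second VSDBCMD command does not overwrite the first.

```python
import subprocess
from typing import List


def script_commands(server: str, create_script: str, delta_script: str) -> List[List[str]]:
    """sqlcmd command lines for the CREATE script followed by the DELTA script."""
    # -E: Windows authentication; -b: non-zero exit code if a script raises an error.
    return [
        ["sqlcmd", "-S", server, "-E", "-b", "-i", create_script],
        ["sqlcmd", "-S", server, "-E", "-b", "-i", delta_script],
    ]


def execute(commands: List[List[str]]) -> None:
    """Run each command in order.

    check=True raises CalledProcessError on a non-zero exit code, which stops
    the sequence before the DELTA runs against a failed CREATE.
    """
    for command in commands:
        subprocess.run(command, check=True)


if __name__ == "__main__":
    commands = script_commands(
        "TESTSQL01",                     # hypothetical test server
        r"c:\temp\ProductionCreate.sql",  # placeholder path for the CREATE script
        r"c:\temp\Delta.sql",             # placeholder path for the DELTA script
    )
    for command in commands:
        print(" ".join(command))
    # execute(commands)  # uncomment on a machine with sqlcmd and access to the server
```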

This concludes the overview of the solution for Continuous Integration and Delivery for SQL Server Databases. I hope it gives you a complete overview for creating your own Continuous Delivery process. Feel free to contact me if you have any questions or comments.

Review of Key Concepts